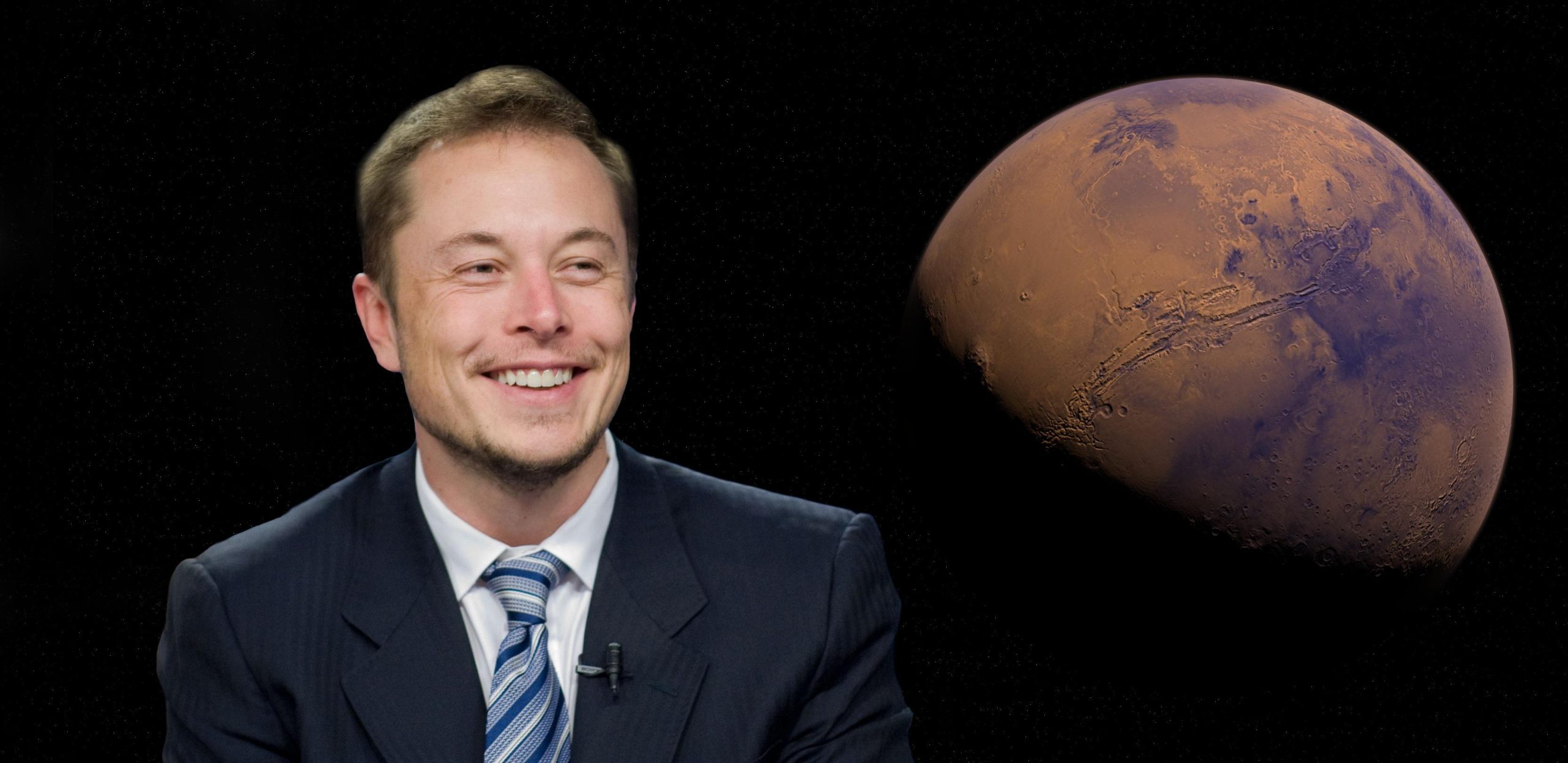

These Democrats Want the FEC To Crack Down on Elon Musk’s Grok

On Monday, a group of Democratic lawmakers sent a letter to the Federal Election Commission (FEC), asking the agency to adopt regulations prohibiting the creation of deepfakes of election candidates.

The letter, signed by seven congressional Democrats, was sparked by recent controversies surrounding Grok, an AI chatbot released on X, the social media platform owned by Elon Musk. Unlike most other popular image-generating AI, Grok is relatively uncensored and will create images of public figures according to user instructions. For example, I was able to get Grok to generate (not particularly convincing) images of Democratic presidential candidate Kamala Harris shaking hands with Adolf Hitler and North Korean dictator Kim Jong Un.

X users have capitalized on this feature mostly for comedic effect. For example, a Grok-generated image of a heavily pregnant Harris paired with a beaming Donald Trump went viral earlier this month.

But concern rose when Trump shared what appeared to be several fake, AI-generated pictures depicting Taylor Swift fans endorsing him for president.

While these images were quickly identified as being false, the lawmakers still called on the FEC to essentially censor Grok and other AI models. “This election cycle we have seen candidates use Artificial Intelligence (AI) in campaign ads to depict themselves or another candidate engaged in an action that did not happen or saying something the depicted candidate did not say,” they wrote, adding that X had developed “no policies that would allow the platform to restrict images of public figures that could be potentially misleading.”

“The proliferation of deep-fake AI technology has the potential to severely misinform voters, causing confusion and disseminating dangerous falsehoods,” the letter continued. “It is critical for our democracy that this be promptly addressed, noting the degree to which Grok-2 has already been used to distribute fake content regarding the 2024 presidential election.”

The letter was written in support of regulations proposed by a 2023 petition from Public Citizen, a consumer-rights nonprofit. That petition suggested that the FEC clarify that it violates existing election law for candidates or their employees to use deepfakes to “fraudulently misrepresent” rivals.

AI “will almost certainly create the opportunity for political actors to deploy it to deceive voters in ways that extend well beyond any First Amendment protections for political expression, opinion, or satire,” Public Citizen’s petition reads. “A political actor may well be able to use AI technology to create a video that purports to show an opponent making an offensive statement or accepting a bribe. That video may then be disseminated with the intent and effect of persuading voters that the opponent said or did something they did not say or do.”

But is tech censorship really the solution to possibly deceptive images? There’s little reason to think that deepfakes are nearly as big a problem as Big Tech skeptics have warned.

After all, we’ve been pretty good at detecting deepfakes so far. Already-increasing skepticism toward media “may render AI deepfakes more akin to the annoyance of spam emails and prompt greater scrutiny of certain types of content more generally,” Jennifer Huddleston, a senior fellow in technology policy at the Cato Institute, argued during 2023 testimony before the Senate Rules Committee. “History shows a societal ability to adapt to new challenges in understanding the veracity of information put before us and to avoid overly broad rushes to regulate everything but the kitchen sink for fear of what could happen.”